All posts in Paper Reviews.

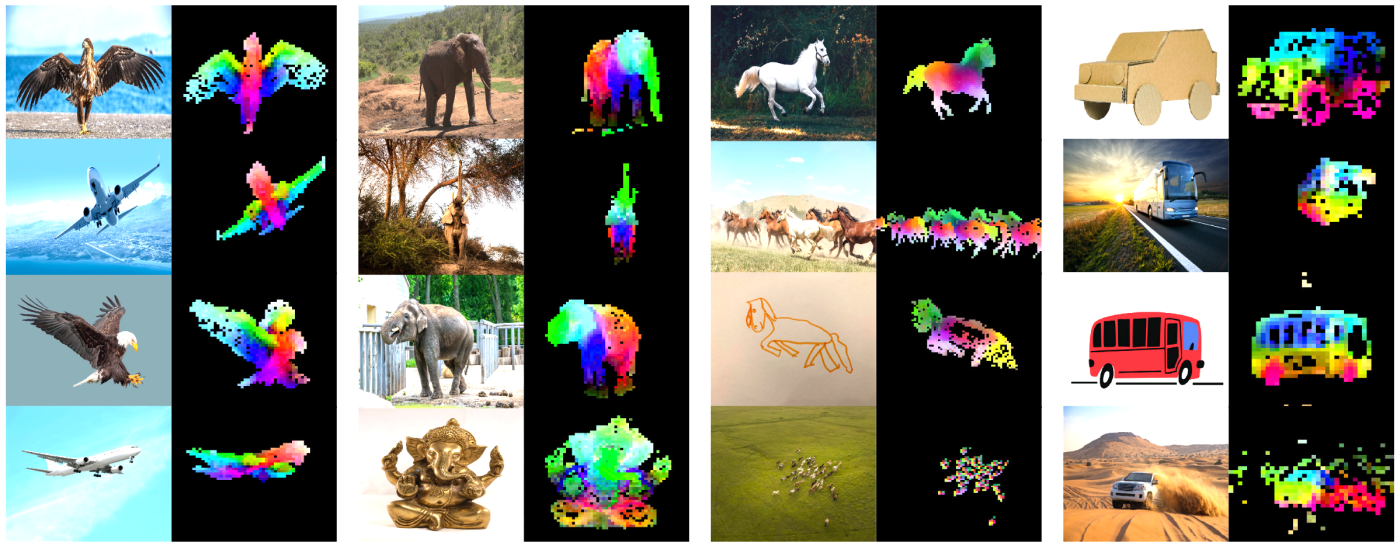

Let’s talk about DINOv2, a paper that takes a major leap forward in the quest for general-purpose visual features in computer vision. Inspired by the success of large-scale self-supervised models in NLP (think GPTs and T5), the authors at Meta have built a visual foundation

DeepSeek-V2 introduces an architectural innovation that improves its efficiency as a language model: Multi-head Latent Attention (MLA). MLA significantly reduces the memory overhead of the key-value cache while maintaining strong performance. In this post, we will explore the fundamental concepts behind

Today we’re going to dive into a fascinating paper called “LOGIC-LM: Empowering Large Language Models with Symbolic Solvers for Faithful Logical Reasoning.” The authors of this paper claim that Large Language Models (LLMs) often struggle when faced with complex logical reasoning problems. Well, LLMs primarily

Sagar Manglani

manglanisagar@gmail.com

Blogger

I write articles on computer vision and robotics.