By Sagar in Discussions

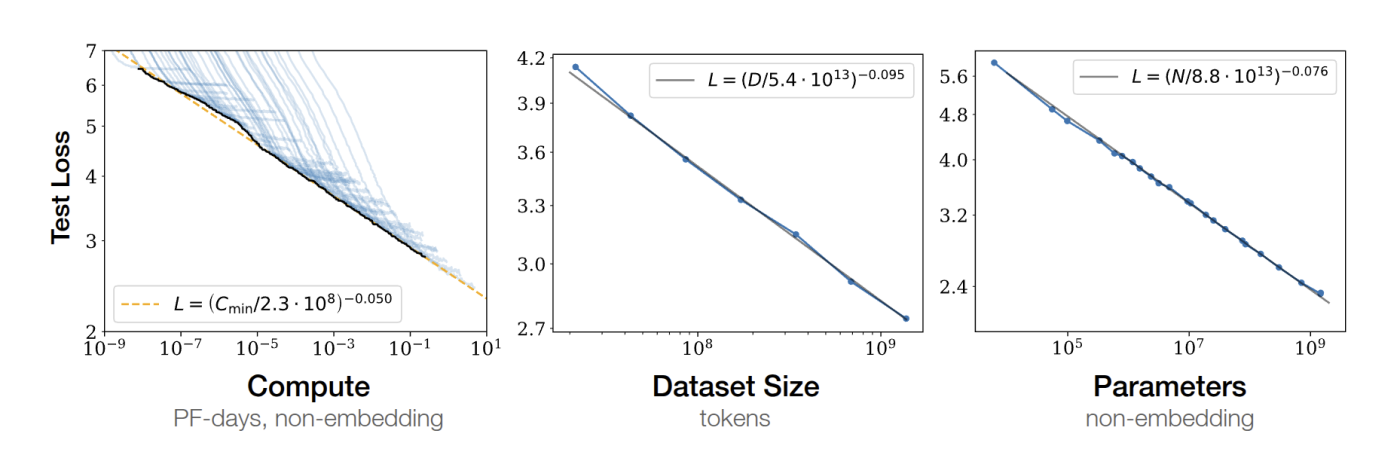

Introduction: Scaling up machine learning models – in terms of model size, dataset size, and compute – has led to dramatic improvements in performance on language and vision tasks. In

Motivation: Autonomous vehicles rely on different sensors (cameras, LiDAR, and radar), each offering a unique view of the world. Combining them is key to reliable perception, but it’s tricky because

Robots in unstructured spaces, such as homes, have struggled to generalize across unpredictable settings. In this context, a few projects stand out more than the rest – for example, the

Let’s talk about DINOv2, a paper that takes a major leap forward in the quest for general-purpose visual features in computer vision. Inspired by the success of large-scale self-supervised models

DeepSeek-V2 introduces a major architectural innovation that enhances its efficiency as a language model – Multi-head Latent Attention (MLA). MLA stands out as a game-changing technique that significantly reduces memory

Today we’re going to dive into a fascinating paper called “LOGIC-LM: Empowering Large Language Models with Symbolic Solvers for Faithful Logical Reasoning.” The authors of this paper claim that Large Language Models (LLMs) often struggle when faced with complex logical reasoning problems. Well, LLMs primarily

Sagar Manglani

manglanisagar@gmail.com

Blogger

I write articles on computer vision and robotics.