DeepSeek-V2 introduces a major architectural innovation that makes it more efficient as a language model: Multi-Headed Latent Attention (MLA). MLA significantly reduces the memory needed at inference time while maintaining strong performance. In this post, we will explore the fundamental concepts behind MLA, why it is needed, and how it improves upon existing attention mechanisms.

Understanding the Multi-Headed Attention (MHA) Mechanism in Transformers

Transformers revolutionized natural language processing with the introduction of the attention mechanism. At its core, attention allows models to weigh the relevance of different input tokens when making predictions, significantly enhancing their ability to process long sequences.

A typical attention mechanism involves three key components:

- Query (Q): Represents the current token looking for relevant information.

- Key (K): Represents candidate tokens that might contain relevant information.

- Value (V): Contains the actual information retrieved when a match between Query and Key is found.

Each token in a sequence computes a similarity score between its query and all keys, which determines how much influence each value has on the final output. This process is repeated across multiple attention heads, allowing the model to focus on different aspects of the input data.
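The query, key, and value interaction described above can be sketched in a few lines of NumPy. This is a minimal illustration of causal multi-head self-attention, not DeepSeek-V2's implementation; the function name, argument names, and the use of square projection matrices are assumptions made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads):
    """Causal multi-head self-attention over x with shape (seq_len, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // n_heads

    def split_heads(t):
        # (seq_len, d_model) -> (n_heads, seq_len, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)

    # Similarity between every query and every key, per head.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)

    # Causal mask: a token may not attend to later positions.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)

    weights = softmax(scores, axis=-1)   # how much each value contributes
    out = weights @ v                    # (n_heads, seq_len, d_head)
    out = out.transpose(1, 0, 2).reshape(seq_len, d_model)
    return out @ w_o, weights
```

Each head produces its own `weights` matrix, which is what lets different heads focus on different aspects of the input.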

The Memory Bottleneck: Key-Value (KV) Cache

While attention mechanisms are powerful, they come with a major drawback: memory consumption. During inference, transformers keep the keys and values of all previous tokens in memory (the KV cache) so they do not have to be recomputed for every new token. However, as context length increases (such as in DeepSeek-V2’s 128K token context), this KV cache grows in proportion to the number of tokens, leading to high memory usage and limiting deployment efficiency.

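A key challenge in existing models is that the KV cache grows linearly with sequence length, and also with the number of layers, the number of heads, and the batch size. Because the cache has to be read for every generated token, it consumes substantial memory bandwidth during inference as well as capacity. The pressure is felt all the more in MoE models: sparse activation keeps per-token compute low, but the large total parameter count already occupies much of the available device memory.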
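To make the linear growth concrete, here is a small back-of-the-envelope calculator. The function name and the configuration values are illustrative assumptions chosen for the example (16-bit elements, batch size 1), not measurements of any deployed model.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, d_head,
                   bytes_per_elem=2, batch_size=1):
    """Bytes needed to cache keys and values for standard attention."""
    # Factor 2: one key vector and one value vector per head, layer, and token.
    return 2 * batch_size * seq_len * n_layers * n_kv_heads * d_head * bytes_per_elem

# Illustrative configuration (assumed for this example).
cfg = dict(n_layers=60, n_kv_heads=128, d_head=128, bytes_per_elem=2)

for seq_len in (4_096, 32_768, 131_072):
    gib = kv_cache_bytes(seq_len, **cfg) / 2**30
    print(f"{seq_len:>7} tokens -> {gib:.1f} GiB")
```

Multiplying the context length by 8 multiplies the cache by 8: the growth is linear, but at long contexts the absolute numbers become very large.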

Grouped Query Attention (GQA)

One approach to mitigating KV cache growth is Grouped Query Attention (GQA). Rather than giving every query head its own key and value heads, GQA divides the query heads into groups, and each group shares a single key head and a single value head. Fewer key and value heads need to be cached, so the KV cache shrinks by the ratio of query heads to groups, usually without drastically affecting model quality.

While GQA alleviates some memory concerns, it still caches full-size keys and values for every group, so the savings are bounded by how few groups the model can tolerate. This is where Multi-Headed Latent Attention (MLA) takes a different route, which we return to after looking at one more variant.

Multi-Query Attention (MQA)

Multi-Query Attention (MQA) takes this sharing to its extreme. Instead of multiple attention heads each with their own keys and values, MQA shares a single key head and a single value head across all attention heads, which is the same as GQA with one group. This drastically reduces the memory required for the KV cache, while each head still keeps its own queries.

However, MQA introduces a trade-off: while it lowers memory costs, it sacrifices some expressiveness compared to full Multi-Headed Attention (MHA) or Grouped Query Attention (GQA). In MHA, each attention head has independent query-key interactions, leading to richer feature representation but at the cost of significantly higher memory usage. GQA strikes a balance between these by grouping queries together to share keys and values.
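The relationship between the three variants can be sketched with a single function in which only the number of key/value heads changes. This is an illustrative sketch; `grouped_scores` and `cached_elements_per_token` are hypothetical helper names, and the head counts are arbitrary.

```python
import numpy as np

def grouped_scores(q, k):
    """Attention scores when several query heads share each key head.

    q: (n_q_heads, seq_len, d_head), k: (n_kv_heads, seq_len, d_head).
    """
    n_q_heads, n_kv_heads = q.shape[0], k.shape[0]
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    # Query heads [g*group_size, (g+1)*group_size) all read cached key head g.
    k_shared = np.repeat(k, group_size, axis=0)
    return q @ k_shared.transpose(0, 2, 1) / np.sqrt(q.shape[-1])

def cached_elements_per_token(n_kv_heads, d_head):
    """Key plus value elements cached per token in one layer."""
    return 2 * n_kv_heads * d_head

rng = np.random.default_rng(0)
n_q_heads, seq_len, d_head = 8, 5, 16
q = rng.normal(size=(n_q_heads, seq_len, d_head))

# MHA: one KV head per query head; GQA: a few; MQA: exactly one.
for name, n_kv_heads in [("MHA", 8), ("GQA", 2), ("MQA", 1)]:
    k = rng.normal(size=(n_kv_heads, seq_len, d_head))
    scores = grouped_scores(q, k)
    print(name, scores.shape, cached_elements_per_token(n_kv_heads, d_head))
```

The score tensor has the same shape in all three cases; what changes is how many distinct key and value heads have to be kept in the cache.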

Multi-Headed Latent Attention (MLA)

MLA fundamentally changes what a transformer has to remember: the keys and values of each token are jointly compressed into a single low-dimensional latent vector, and only that latent vector is cached. Rather than storing every head's key and value explicitly, MLA keeps a condensed representation from which keys and values are rebuilt through learned up-projections when attention is computed, drastically reducing memory requirements without sacrificing performance. MLA’s approach aligns closely with how neural networks leverage latent spaces to represent complex data efficiently. In deep learning, latent spaces capture compressed representations of inputs while retaining meaningful information. Similarly, MLA maps the raw keys and values into a latent space, preserving key relationships while dramatically reducing storage needs.

This compression is akin to dimensionality reduction techniques like Principal Component Analysis (PCA) or Variational Autoencoders (VAEs), which distill high-dimensional data into more compact representations without significant information loss. In MLA the compression is a low-rank projection whose weights are learned together with the rest of the model. By leveraging a latent space for attention, MLA keeps long sequences computationally feasible without sacrificing contextual richness. The DeepSeek-V2 paper reports that, compared with DeepSeek 67B, DeepSeek-V2 reduces the KV cache by 93.3% and raises maximum generation throughput to 5.76×. The cache still grows linearly with sequence length, but each token's entry is so much smaller that ultra-long contexts can be supported without incurring prohibitive memory overhead.
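The core idea of compressing keys and values into a cached latent can be sketched as follows. This is a deliberately simplified illustration under stated assumptions: the matrix names (`w_dkv`, `w_uk`, `w_uv`) and all dimensions are made up for the example, the weights are random stand-ins for learned parameters, and details of the real MLA design such as positional encoding and query handling are left out.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_heads, d_head, d_latent, seq_len = 64, 4, 16, 8, 10

# Random stand-ins for learned projection matrices (assumed names).
w_dkv = rng.normal(size=(d_model, d_latent))           # down-projection
w_uk = rng.normal(size=(d_latent, n_heads * d_head))   # up-projection for keys
w_uv = rng.normal(size=(d_latent, n_heads * d_head))   # up-projection for values

h = rng.normal(size=(seq_len, d_model))  # hidden states of the tokens seen so far

# Only this latent is cached: one d_latent-sized vector per token.
c_kv = h @ w_dkv

# Keys and values for every head are rebuilt from the latent when needed.
k = (c_kv @ w_uk).reshape(seq_len, n_heads, d_head)
v = (c_kv @ w_uv).reshape(seq_len, n_heads, d_head)

full_cache = 2 * n_heads * d_head   # elements per token if K and V were cached
latent_cache = d_latent             # elements per token with the latent cache
print(f"per-token cache: {full_cache} -> {latent_cache} elements")
```

Because every key and value is a linear function of the same small latent, the reconstructed keys have rank at most `d_latent`. That is the sense in which the compression is low-rank, and it is why the choice of latent size trades memory against expressiveness.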

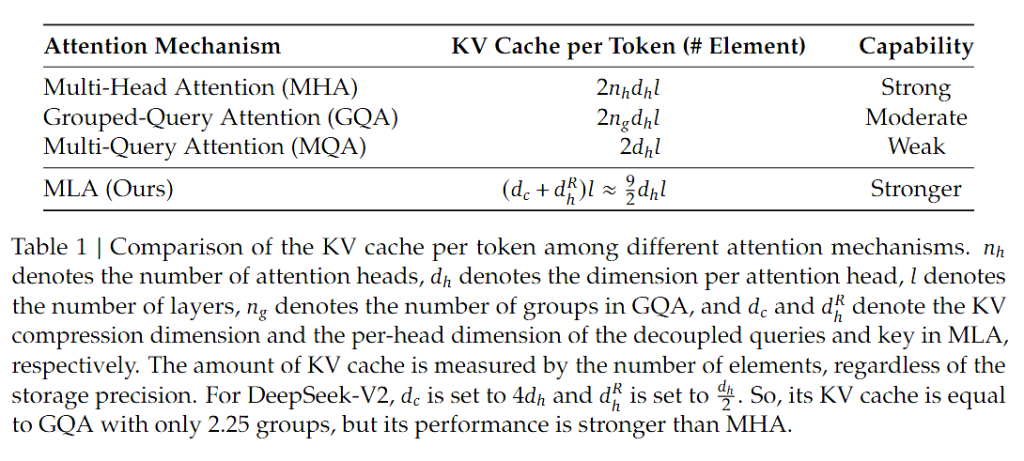

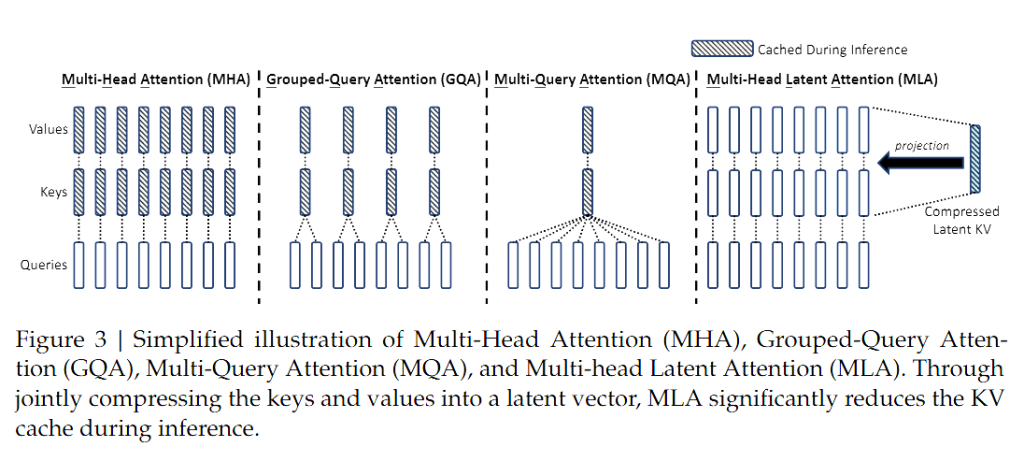

Comparing MHA, GQA, MQA, and MLA

To better understand the impact of MLA, Table 1 in the DeepSeek-V2 paper compares the per-token KV cache size of Multi-Headed Attention (MHA), Grouped Query Attention (GQA), Multi-Query Attention (MQA), and MLA, alongside the capability of each. MHA stores full keys and values for every attention head. GQA and MQA shrink the cache by sharing key and value heads, at some cost in capability. MLA caches only a small latent vector per token: the paper puts its cache at roughly that of GQA with only 2.25 groups, while reporting performance stronger than MHA. MQA's cache is smaller still in absolute terms, so MLA's advantage is the combination of a small cache with strong performance rather than the smallest cache outright. Figure 3 further illustrates how the four mechanisms differ in what they keep in the cache.
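The comparison can be reproduced with a few lines of arithmetic. The per-token formulas and the DeepSeek-V2 dimensions below are written from my reading of the paper and should be checked against its Table 1 before being relied on; the GQA group count of 8 is a hypothetical value added purely for comparison.

```python
n_layers, n_heads, d_head = 60, 128, 128
n_groups = 8             # hypothetical GQA group count, for comparison only
d_latent = 4 * d_head    # compressed KV latent dimension (assumed)
d_rope = d_head // 2     # extra per-token key carrying positional information (assumed)

# Cached elements per token, summed over all layers.
per_token = {
    "MHA": 2 * n_heads * d_head * n_layers,
    "GQA": 2 * n_groups * d_head * n_layers,
    "MQA": 2 * d_head * n_layers,
    "MLA": (d_latent + d_rope) * n_layers,
}

for name, elems in per_token.items():
    share = elems / per_token["MHA"]
    print(f"{name}: {elems:>9,} elements/token ({share:.2%} of MHA)")
```

Under these assumptions MLA sits between MQA and an 8-group GQA, at a small fraction of the MHA cache, which matches the "GQA with 2.25 groups" characterization.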

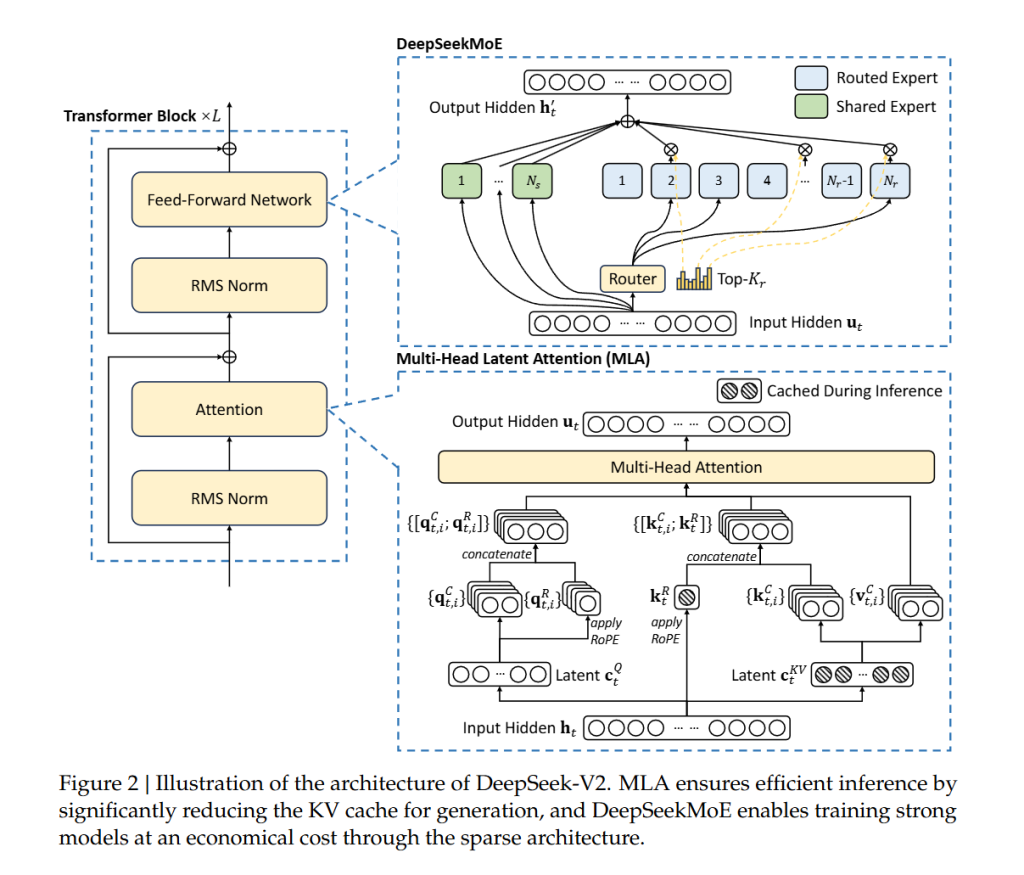

Figure 2 provides insight into how MLA is integrated within the transformer block, showcasing its unique architecture. Unlike MHA, GQA, or MQA, which cache keys and values directly, MLA caches a compressed latent and reconstructs keys and values from it, minimizing storage overhead while preserving essential information for long-context modeling.

Conclusion

Multi-Headed Latent Attention (MLA) represents a major advancement in transformer architecture, addressing one of the most pressing issues in modern language models: memory efficiency. By caching a compact latent representation instead of full keys and values, MLA enables DeepSeek-V2 to handle long contexts with reduced memory demands and faster inference speeds.

As models continue to scale, innovations like MLA will play a critical role in ensuring that large-scale AI remains both powerful and deployable. DeepSeek-V2’s adoption of MLA highlights the importance of rethinking traditional attention mechanisms, paving the way for more efficient and scalable transformers in the future.